Introduction

For companies operating globally or expanding into new markets, reliable location data isn’t just a nice-to-have – it’s a critical foundation for success. Whether you’re validating addresses, optimizing delivery routes, or analyzing market coverage, the quality of your location data directly impacts your operational efficiency and bottom line.

As businesses increasingly rely on location-based services, many turn to open-source location databases as a starting point. These resources can seem attractive due to their accessibility and zero upfront cost. However, understanding their capabilities, limitations, and appropriate use cases is crucial for making informed decisions about your location data strategy.

💡 Use accurate location data to create your strategic plan and expand your business. For over 15 years, we have made the most comprehensive worldwide zip code database for address validation, supply chain data management, geocoding, map data visualization, etc. Browse GeoPostcodes datasets and download a free sample here.

This article explores the main open-source location data sources and examines how they fit into the broader landscape of location intelligence solutions.

| Source | OpenStreetMap (OSM) | GeoNames | US ZIP Code Database | HDX | Python Libraries | Natural Earth | Database of Global Administrative Areas (GADM) |

|---|---|---|---|---|---|---|---|

| Geographic Coverage | Global | Global place names; postal codes for 96 countries | United States only | Global (humanitarian focus) | Varies by library (mostly US-focused, except Geopy) | Global | Global |

| Update Frequency | Real-time community updates | Varies by region | Irregular updates | Crisis-driven updates | Varies (Pyzipcode: limited, Uszipcode: semi-regular, Geopy: API-dependent) | Periodic but not frequent | Periodic releases (not real-time) |

| Data Validation | Community-driven | Basic validation | USPS-maintained but may lag | Strict UN protocols | Basic validation (Pyzipcode), Enhanced (Uszipcode, Geopy API-based) | Standardized but may not reflect the latest changes | Curated by researchers |

| Postal Code Coverage | Limited | Comprehensive but may be outdated | Complete US coverage | Varies by region | Pyzipcode: Basic US, Uszipcode: Comprehensive US, Geopy: API-dependent | No postal codes | None (administrative boundaries only) |

| Integration Capabilities | Extensive API support | Basic API access | Database download | API and bulk download | Python libraries (some require database downloads) | GIS-compatible formats (Shapefile, GeoJSON) | GIS-compatible formats (Shapefile, GeoJSON, etc.) |

| Best Use Case | Digital mapping and routing | Global place name lookup | US address validation | Humanitarian operations | Python-based postal lookups, geocoding, spatial analysis | Cartography and global geographic reference | Administrative boundary analysis |

| Data Format | Multiple formats (XML, JSON, etc.) | Text/CSV | CSV/Database | Multiple standardized formats | Python objects (Pyzipcode, Uszipcode), API responses (Geopy), Geometric data (Shapely) | Shapefile, GeoJSON | Shapefile, GeoPackage, KMZ, R-raster |

| Technical Expertise Required | Moderate to High | Low to Moderate | Low | Moderate | Low to Moderate (varies by library) | Low | Moderate |

| Additional Features | Points of interest, roads, buildings | Place names, elevation data | Basic demographic data | Humanitarian indicators | Geopy: Global geocoding, Uszipcode: US Demographics, Shapely: Spatial analysis | Physical geography, administrative boundaries | Multi-level administrative hierarchies |

| Limitations | Inconsistent structure, rural coverage gaps | Outdated postal codes, country disparities | US-only coverage, no global equivalent | Crisis-area focus, inconsistent structure, misaligned shapes | Pyzipcode is outdated, Uszipcode is US-only, Geopy relies on external APIs | Low-resolution boundaries, outdated in some regions | Commercial use restrictions, no postal data |

Factors for Evaluating Open-Source Location Databases

Understanding how to evaluate open-source location data becomes crucial as your business requirements grow. Let’s examine the critical factors that should influence your decision-making process.

Data Quality and Accuracy

Data quality in open-source location databases operates on multiple levels. Beyond basic accuracy, businesses need to consider completeness, consistency, and timeliness.

Think About It: When evaluating data quality, consider these questions:

- How complete is the coverage in your target markets?

- What verification processes are in place?

- How quickly are updates implemented?

- What is the source of the original data?

- Can I legally use this data commercially?

- Can I keep this data accurate and up-to-date?

- Will I need support in ensuring the quality of the data as well as implementing it?

The reality is that open-source data quality varies significantly based on region and contributor activity. Urban areas typically show higher quality due to more frequent updates and verification, while rural areas may suffer from outdated or incomplete information.

Maintenance and Updates

The maintenance model of open-source location data presents unique challenges. Unlike commercial solutions with dedicated update schedules, open-source databases rely on community contributions and voluntary maintenance.

Real-World Impact: Changes in postal codes, street names, or administrative boundaries can have immediate business implications. A delay in updating this information can lead to failed deliveries, customer dissatisfaction, and increased operational costs.
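One way to catch such changes early is to compare successive snapshots of a dataset. The sketch below is a minimal illustration, assuming each snapshot is a simple mapping from postal code to place name. The sample codes and the `diff_snapshots` helper are hypothetical and not part of any library mentioned in this article.

```python
def diff_snapshots(old, new):
    """Compare two {postal_code: place_name} snapshots."""
    old_codes, new_codes = set(old), set(new)
    return {
        "added": sorted(new_codes - old_codes),
        "removed": sorted(old_codes - new_codes),
        # Codes present in both snapshots whose place name changed.
        "renamed": sorted(
            code for code in old_codes & new_codes if old[code] != new[code]
        ),
    }


# Hypothetical sample data, not taken from any real dataset.
previous = {"1000": "Alpha", "2000": "Beta", "3000": "Gamma"}
current = {"1000": "Alpha", "2000": "Beta City", "4000": "Delta"}

changes = diff_snapshots(previous, current)
print(changes)
```

Running a comparison like this on every refresh gives you a short list of records to review before the new data reaches production systems.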

Data Format and Standardization

The challenge of data standardization becomes especially evident when businesses integrate open-source location data from multiple sources. Differences in attribute definitions, table structures, coordinate projections, and geometry types can introduce inconsistencies that require significant preprocessing before use.

For example, administrative boundaries from different datasets may use varying classification levels—one source might define a “region” as a first-level administrative division, while another labels it as a “province.” Similarly, some datasets provide polygon geometries for boundaries, while others only include centroids. These inconsistencies make direct comparisons and integrations difficult without additional transformation efforts.
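A common preprocessing step is to map each source's labels onto a shared numeric level before merging. The following sketch assumes two hypothetical sources (`source_a`, `source_b`) and an illustrative mapping table; in practice the mapping has to be built per source and per country.

```python
# Hypothetical mapping: (source, label) -> common administrative level.
LEVEL_BY_SOURCE_LABEL = {
    ("source_a", "region"): 1,
    ("source_b", "province"): 1,
    ("source_a", "district"): 2,
    ("source_b", "county"): 2,
}


def harmonize(record, source):
    """Return a copy of the record with a numeric 'admin_level' field.

    Unknown labels get admin_level None so they can be reviewed manually.
    """
    label = record["type"].strip().lower()
    level = LEVEL_BY_SOURCE_LABEL.get((source, label))
    return {**record, "admin_level": level}


a = harmonize({"name": "North", "type": "Region"}, "source_a")
b = harmonize({"name": "North", "type": "Province"}, "source_b")
print(a["admin_level"] == b["admin_level"])
```

Keeping unmapped labels as `None` rather than guessing makes gaps in the mapping visible instead of silently merging mismatched levels.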

Even within the same country, location datasets may use different projections, making spatial analysis challenging without proper conversion. Some sources use latitude/longitude (WGS84), while others rely on national coordinate reference systems.
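To make the difference concrete, the sketch below converts WGS84 longitude/latitude into Web Mercator (EPSG:3857) meters using the standard spherical formula. Web Mercator is used here only as a simple example of a projected system. National reference systems usually involve datum shifts that are better handled by a dedicated library such as pyproj, which this sketch does not use.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis, used by Web Mercator


def wgs84_to_web_mercator(lon_deg, lat_deg):
    """Convert WGS84 degrees to Web Mercator (EPSG:3857) meters."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(
        math.tan(math.pi / 4 + math.radians(lat_deg) / 2)
    )
    return x, y


x, y = wgs84_to_web_mercator(180.0, 0.0)
print(round(x, 2), round(y, 2))
```

The same location ends up with very different numbers in each system, which is why layers in different projections will not overlay correctly until one of them is converted.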

Important Considerations for Open-Source Location Databases:

- Can I keep this data accurate and up-to-date? Open-source datasets evolve at different rates. Some may update in real-time (OSM), while others are refreshed sporadically (GeoNames, Natural Earth). Businesses must establish processes for monitoring updates and integrating new data.

- Will I need support in ensuring the quality of the data as well as implementing it? Managing location data quality requires dedicated resources, either in-house expertise or external support. Organizations should assess whether they have the technical capacity to clean, validate, and maintain the data or if they need third-party solutions to ensure ongoing accuracy.

Successful use of open-source location data depends not only on acquiring the data but also on actively maintaining and refining it to meet business needs.

Data Licensing and Usage Rights

Open-source does not mean unrestricted. Each dataset is published under specific license terms that define which uses are permitted, so check the license before you start working with the data.

Here’s a summary of the licensing terms for the major open-source location databases:

| Database | License | Key Restrictions |

|---|---|---|

| OpenStreetMap (OSM) | Open Database License (ODbL) | Requires attribution and share-alike (derivative works must use same license) |

| GeoNames | Creative Commons Attribution 4.0 | Requires attribution; some datasets have additional restrictions |

| US ZIP Code Database | USPS licensing terms | Restrictions on redistribution and commercial use |

| HDX | Various (dataset-dependent) | Some datasets restricted to humanitarian/non-commercial use |

| Natural Earth | Public Domain | No restrictions, free for any use |

| GADM | Academic/Non-commercial license | Commercial use requires special permission |

For businesses, failing to comply with these licenses can result in legal and financial risks. Before using open-source location data commercially, it’s essential to:

- Verify if commercial use is permitted

- Thoroughly review the specific license terms

- Ensure compliance with attribution requirements

- Check if derived products must be shared under the same license
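These checks can be encoded as a small lookup so that every new data source passes through the same gate. The sketch below transcribes the summary table above into Python. The `LicenseInfo` type, the status labels, and the `review_for_commercial_use` helper are illustrative assumptions, and the entries are no substitute for reading the current license text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LicenseInfo:
    license: str
    commercial_use: str  # "allowed", "restricted", or "varies"
    obligations: tuple = ()


# Transcribed from the summary table above; verify before relying on it.
LICENSES = {
    "OpenStreetMap": LicenseInfo("ODbL", "allowed", ("attribution", "share-alike")),
    "GeoNames": LicenseInfo("CC BY 4.0", "allowed", ("attribution",)),
    "US ZIP Code Database": LicenseInfo("USPS licensing terms", "restricted"),
    "HDX": LicenseInfo("Dataset-dependent", "varies"),
    "Natural Earth": LicenseInfo("Public Domain", "allowed"),
    "GADM": LicenseInfo("Academic/Non-commercial", "restricted"),
}


def review_for_commercial_use(sources):
    """Split sources into commercially usable ones and ones needing review."""
    usable, needs_review = [], []
    for name in sources:
        info = LICENSES.get(name)
        if info is not None and info.commercial_use == "allowed":
            usable.append((name, info.obligations))
        else:
            # Unknown, restricted, or dataset-dependent terms.
            needs_review.append(name)
    return usable, needs_review


usable, needs_review = review_for_commercial_use(["OpenStreetMap", "GADM", "HDX"])
print(usable)
print(needs_review)
```

Treating unknown sources as "needs review" by default keeps an unlisted dataset from slipping into a commercial product unnoticed.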

Top Open-Source Location Databases

In this section, we’ll explore seven major open-source location databases, each offering distinctive capabilities for different business applications. Understanding how each database’s features align with your specific requirements will help you determine which resources best complement your location data strategy.

Before diving into specific databases, it’s helpful to understand the different types of geospatial data they contain:

- Point Data: Single locations represented by coordinates (latitude/longitude), such as city centers, addresses, or points of interest.

- Line Data: Connected points forming features like roads, rivers, or boundaries.

- Polygon Data: Closed shapes representing areas like administrative boundaries, postal code zones, or building footprints.

- Text/Attribute Data: Non-spatial information attached to geographic features, such as place names, postal codes, or demographic information.
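As an illustration, here is how the four types look when expressed as GeoJSON-style features in Python. The coordinates, names, and postal code are made up for the example. Note that GeoJSON orders coordinates as longitude, then latitude, and that a polygon ring repeats its first point to close the shape.

```python
import json

# Point: a single location with attribute data attached.
point = {
    "type": "Feature",
    "geometry": {"type": "Point", "coordinates": [10.0, 50.0]},
    "properties": {"name": "Sample city center"},
}

# Line: an ordered sequence of connected points.
line = {
    "type": "Feature",
    "geometry": {
        "type": "LineString",
        "coordinates": [[10.0, 50.0], [10.1, 50.05], [10.2, 50.1]],
    },
    "properties": {"name": "Sample road"},
}

# Polygon: a closed ring (first and last coordinates are identical).
polygon = {
    "type": "Feature",
    "geometry": {
        "type": "Polygon",
        "coordinates": [
            [[10.0, 50.0], [10.2, 50.0], [10.2, 50.1], [10.0, 50.1], [10.0, 50.0]]
        ],
    },
    "properties": {"postal_code": "00000"},
}

collection = {"type": "FeatureCollection", "features": [point, line, polygon]}
geometry_types = [f["geometry"]["type"] for f in collection["features"]]
print(geometry_types)
print(len(json.dumps(collection)), "characters when serialized")
```

The `properties` dictionary on each feature is where text and attribute data live, regardless of the geometry type.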

Each database below contains different combinations of these data types, making them suitable for different business applications.

OpenStreetMap (OSM)

OpenStreetMap is one of the most recognized names in open-source mapping and geospatial data. Think of it as the Wikipedia of maps – a collaborative project where volunteers worldwide contribute geographic data. This crowdsourced approach has created an extensive database of roads, buildings, and points of interest.

The community-driven nature of OSM creates both advantages and challenges. You’ll find detailed, frequently updated information in urban areas where contributor density is high. Major cities often have street-level accuracy that rivals commercial solutions. However, this same characteristic leads to significant variations in rural areas, where fewer contributors mean less frequent updates and potential gaps in coverage.

Another challenge is the lack of a standardized structure across regions—or even within the same country. Different mapping conventions, varying levels of detail, and inconsistent tagging can make it challenging to work with OSM data at scale. This often requires significant data cleaning and normalization before integration into business applications.
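Postal codes are a typical example of this tagging inconsistency. The sketch below assumes OSM elements have already been loaded as tag dictionaries and that postal codes may appear under either `addr:postcode` or `postal_code`. Treat the key list as an assumption to check against your own extract. The `extract_postcode` helper is hypothetical.

```python
# Tag keys to try, in order of preference (an assumption; adjust per region).
POSTCODE_KEYS = ("addr:postcode", "postal_code")


def extract_postcode(tags):
    """Return a whitespace-normalized, upper-cased postal code, or None."""
    for key in POSTCODE_KEYS:
        value = tags.get(key)
        if value and value.strip():
            # Collapse runs of whitespace and normalize letter case.
            return " ".join(value.split()).upper()
    return None


print(extract_postcode({"addr:postcode": " sw1a  1aa "}))
print(extract_postcode({"postal_code": "75001"}))
print(extract_postcode({"name": "Unnamed road"}))
```

Normalization rules like these tend to grow country by country, which is part of the cleaning effort to budget for when working with OSM at scale.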

Consider this real-world scenario: A logistics company initially used OSM for European route planning. While the system worked well in major cities, drivers frequently encountered outdated or missing information in rural areas, leading to delivery delays and customer dissatisfaction. Additionally, inconsistencies in road classifications and address formats across countries complicated automated processing, requiring additional data transformation efforts.

| Feature | Details |

|---|---|

| Geographic Coverage | Global |

| Update Frequency | Real-time community updates |

| Data Validation | Community-driven |

| Postal Code Coverage | Limited |

| Integration Capabilities | Extensive API support |

| Best Use Case | Digital mapping and routing |

| Data Format | Multiple formats (XML, JSON, etc.) |

| Technical Expertise Required | Moderate to High |

| Additional Features | Points of interest, roads, buildings |

| Limitations | Inconsistent structure, rural coverage gaps |

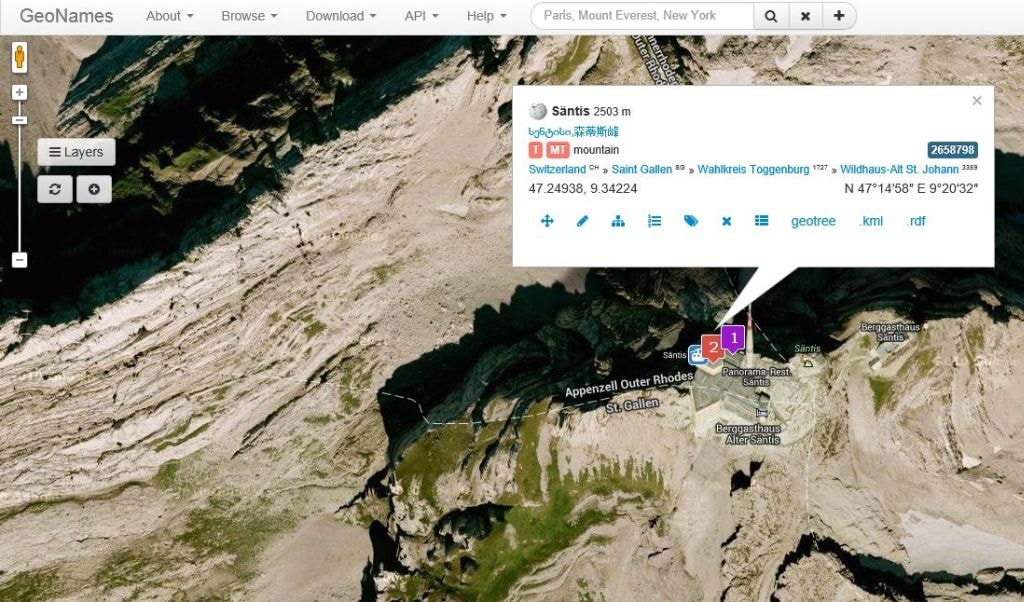

GeoNames

GeoNames represents another significant player in the open-source location data landscape. This database links places to postal codes across multiple countries, offering a foundation for basic location services.

What sets GeoNames apart is its hierarchical structure of place names and administrative boundaries. For instance, you can trace the relationship between a city, its region, and its country, making it valuable for fundamental geographic analysis. However, businesses should note that GeoNames faces postal code accuracy and timeliness challenges.

Important Note: While GeoNames provides postal code data for 96 countries, its coverage is inconsistent. The depth and accuracy of the data vary significantly between regions, with some countries having comprehensive, up-to-date information while others suffer from outdated or incomplete coverage. This disparity can impact applications that rely on precise postal code validation, such as e-commerce, logistics, and address verification.

For example, countries with well-maintained postal systems may have detailed and regularly updated entries. In contrast, others—especially those without open postal data—may rely on sporadic community contributions or outdated sources. This means businesses using GeoNames must carefully assess its reliability country-by-country and, in some cases, supplement it with other datasets to ensure accuracy.
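A country-by-country assessment usually starts with loading the data. GeoNames distributes its postal code data as tab-separated text files, and the sketch below parses that layout. It assumes the column order described in the GeoNames postal code readme (country code, postal code, place name, three admin name/code pairs, latitude, longitude, accuracy), so verify it against the readme of the file you download. The sample row and the `PostalRecord` type are hypothetical.

```python
import csv
import io
from typing import NamedTuple, Optional


class PostalRecord(NamedTuple):
    country_code: str
    postal_code: str
    place_name: str
    admin_name1: str
    latitude: Optional[float]
    longitude: Optional[float]


def parse_postal_rows(text):
    """Yield PostalRecord items from tab-separated postal code text."""
    reader = csv.reader(
        io.StringIO(text, newline=""), delimiter="\t", quoting=csv.QUOTE_NONE
    )
    for row in reader:
        if len(row) < 11:
            continue  # skip malformed or truncated lines
        yield PostalRecord(
            country_code=row[0],
            postal_code=row[1],
            place_name=row[2],
            admin_name1=row[3],
            latitude=float(row[9]) if row[9] else None,
            longitude=float(row[10]) if row[10] else None,
        )


# Hypothetical row following the assumed 12-column layout.
sample = "XX\t12345\tSampletown\tSample Region\tSR\t\t\t\t\t10.5\t20.25\t4\n"
records = list(parse_postal_rows(sample))
print(records[0])
```

Once the rows are loaded, counting records and missing coordinates per country gives a quick first indication of where the data needs supplementing.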

| Feature | Details |

|---|---|

| Geographic Coverage | Global place names; postal codes for 96 countries |

| Update Frequency | Varies by region |

| Data Validation | Basic validation |

| Postal Code Coverage | Comprehensive but may be outdated |

| Integration Capabilities | Basic API access |

| Best Use Case | Global place name lookup |

| Data Format | Text/CSV |

| Technical Expertise Required | Low to Moderate |

| Additional Features | Place names, elevation data |

| Limitations | Outdated postal codes, country disparities |

While GeoNames offers international coverage with varying quality, other databases focus on specific regions with greater depth of information.

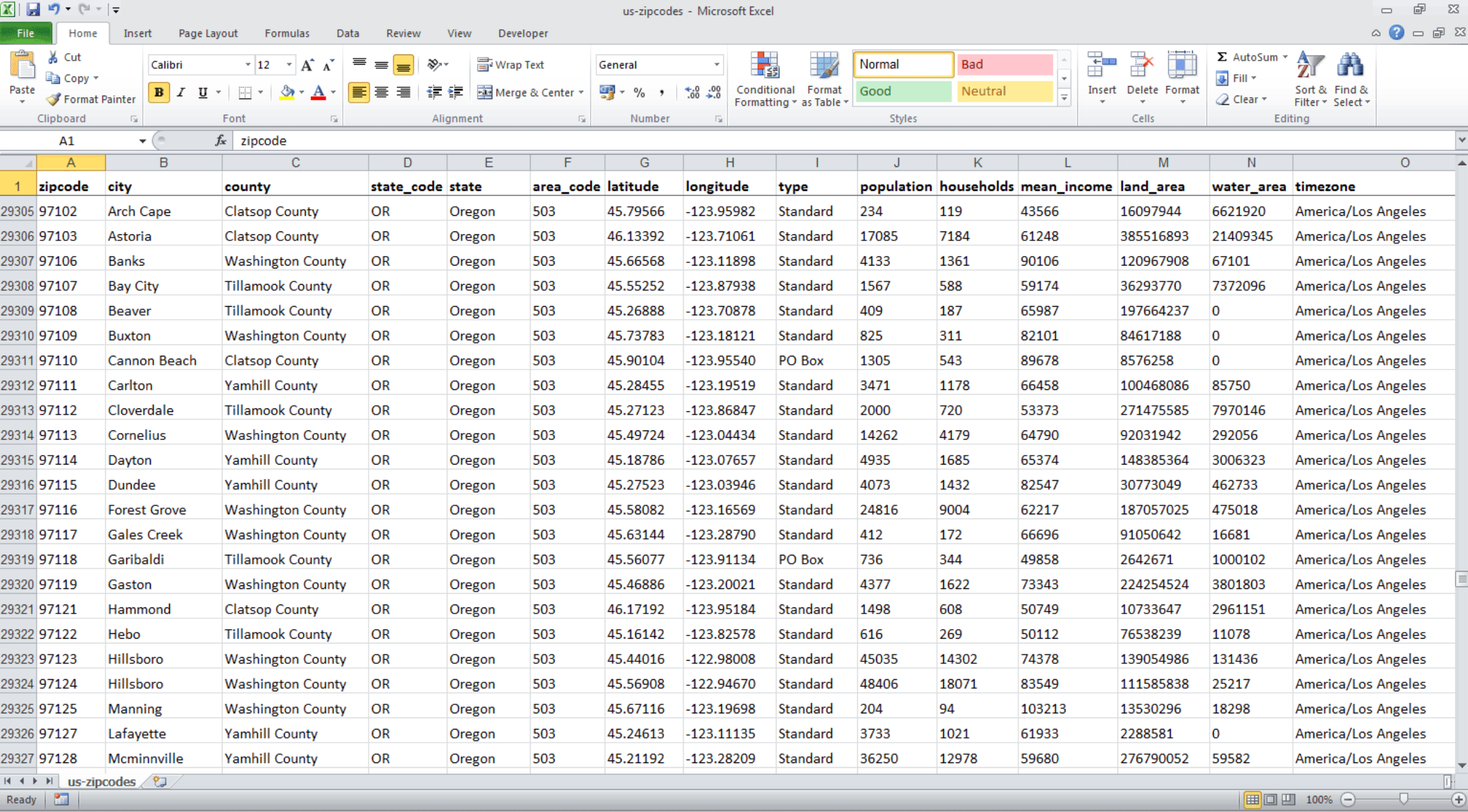

US ZIP Code Database

The US ZIP Code database provides specific coverage of American postal codes for businesses focusing on the United States market. It includes fundamental information about ZIP codes, associated cities, and geographic coordinates.

The database stands out for its straightforward approach to US postal geography. It includes basic demographic data and boundary information for each ZIP code, making it useful for initial market analysis. However, businesses should consider its limitations:

Understanding ZIP Code Data Limitations

- No international coverage—strictly limited to US ZIP codes

- Refresh rates may not always match USPS updates

- Limited additional location attributes beyond basic postal information

- It can be challenging to link with other datasets due to format inconsistencies and lack of standard identifiers

While the USPS ZIP Code database is a valuable resource for U.S.-based applications, its exclusivity to the United States makes it unsuitable for businesses requiring global postal data. Additionally, ZIP codes are designed for mail delivery rather than geographic precision, meaning they don’t always align cleanly with administrative boundaries or other geospatial datasets. This can introduce challenges when merging ZIP code data with external sources, such as census data, customer databases, or mapping systems.
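Before joining ZIP codes against any of these sources, it helps to confirm that the values are well formed. The sketch below checks only the format (five digits, optionally followed by a hyphen and four digits). It does not confirm that a code actually exists or is still in use. The `is_valid_zip_format` helper is hypothetical.

```python
import re

# Five digits, optionally followed by a hyphen and four digits (ZIP+4).
ZIP_PATTERN = re.compile(r"[0-9]{5}(-[0-9]{4})?")


def is_valid_zip_format(value):
    """Return True if the value looks like a ZIP or ZIP+4 code."""
    return ZIP_PATTERN.fullmatch(value.strip()) is not None


for candidate in ["12345", "12345-6789", "1234", "ABCDE"]:
    print(candidate, is_valid_zip_format(candidate))
```

Storing ZIP codes as strings rather than integers matters here, because codes with leading zeros lose digits when converted to numbers.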

| Feature | Details |

|---|---|

| Geographic Coverage | United States only |

| Update Frequency | Irregular updates |

| Data Validation | USPS-maintained but may lag |

| Postal Code Coverage | Complete US coverage |

| Integration Capabilities | Database download |

| Best Use Case | US address validation |

| Data Format | CSV/Database |

| Technical Expertise Required | Low |

| Additional Features | Basic demographic data |

| Limitations | US-only coverage, no global equivalent |

Python Libraries for Location Data

Python offers several libraries for working with location data, particularly for handling postal codes and geographic queries. These tools are helpful for developers building internal applications, automating data processing, or performing location-based analysis. However, they come with varying levels of coverage, features, and limitations.

Pyzipcode: Simple but Limited

Pyzipcode provides a straightforward way to handle ZIP code operations in Python. It’s easy to implement, making it attractive for basic tasks like postal code validation and distance calculations within the U.S.

| Pros | Cons |

|---|---|

| Simple and lightweight | Limited to U.S. ZIP codes |

| Quick setup for ZIP code lookups and basic distance calculations | Lacks detailed demographic or geographic data |

| | Not actively maintained |
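Distance calculations between postal codes typically work on the coordinates attached to each code. The sketch below shows the haversine formula for great-circle distance using only the standard library. It is an illustration of the general technique, not Pyzipcode's own implementation, and the `haversine_km` name is an assumption.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# One degree of latitude along a meridian.
print(round(haversine_km(0.0, 0.0, 1.0, 0.0), 1))
```

Keep in mind that a postal code's coordinates are usually a single representative point, so distances between codes are approximations rather than door-to-door figures.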

Uszipcode: More Features, Similar Constraints

Uszipcode goes further than Pyzipcode, offering a richer database that includes demographic and geographic details. It provides two database options: a simple version for basic lookups and a rich version with extended attributes.

| Pros | Cons |

|---|---|

| Includes demographic and geographic data | Still limited to U.S. ZIP codes |

| Supports both simple and detailed database options | Requires database downloads, increasing setup complexity |

| Actively maintained and more flexible than Pyzipcode | Can be overkill for simple applications |

Geopy: Flexible Geocoding but Dependent on External APIs

Geopy allows developers to perform geocoding and reverse geocoding using various external providers, such as OpenStreetMap’s Nominatim, Google Maps, and Bing Maps. It is widely used for converting addresses into coordinates and vice versa.

| Pros | Cons |

|---|---|

| Supports multiple geocoding providers | Accuracy depends on the chosen geocoding provider |

| Works globally, unlike Pyzipcode and Uszipcode | Rate limits and API restrictions apply |

| Relatively easy to integrate into Python projects | Requires an internet connection to function |
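Because rate limits apply to most providers, it is worth caching results and spacing out requests. The sketch below wraps any geocoding callable with a cache and a minimum delay. It is provider-agnostic: with Geopy you could pass something like `Nominatim(user_agent="your-app-name").geocode` as the callable, and Geopy also ships its own `RateLimiter` helper in `geopy.extra.rate_limiter`. The `ThrottledGeocoder` class and the fake provider are hypothetical and exist only for this example.

```python
import time


class ThrottledGeocoder:
    """Wrap a geocoding callable with a cache and a minimum delay between calls."""

    def __init__(self, geocode, min_delay_seconds=1.0,
                 sleep=time.sleep, clock=time.monotonic):
        self._geocode = geocode
        self._min_delay = min_delay_seconds
        self._sleep = sleep
        self._clock = clock
        self._last_call = None
        self._cache = {}

    def __call__(self, query):
        # Normalize whitespace and case so near-duplicate queries share a cache entry.
        key = " ".join(query.split()).lower()
        if key in self._cache:
            return self._cache[key]
        if self._last_call is not None:
            wait = self._min_delay - (self._clock() - self._last_call)
            if wait > 0:
                self._sleep(wait)
        result = self._geocode(query)
        self._last_call = self._clock()
        self._cache[key] = result
        return result


# Fake provider standing in for a real geocoding service.
calls = []


def fake_provider(query):
    calls.append(query)
    return (0.0, 0.0)


geocode = ThrottledGeocoder(fake_provider, min_delay_seconds=0.0)
geocode("Example Street 1")
geocode("example  street 1")  # served from the cache, no provider call
print(len(calls))
```

Injecting the provider as a callable also makes it easy to switch geocoding services later without touching the calling code.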

Shapely: Advanced Spatial Analysis but No Built-in Data

Shapely is a powerful library for geometric operations, often used with other geospatial tools like GeoPandas and PostGIS. While it doesn’t provide location data, it helps process and analyze spatial relationships, such as determining whether a point is inside a polygon.

| Pros | Cons |

|---|---|

| Excellent for spatial operations and geometry processing | No built-in location or postal data |

| Works with other geospatial libraries like GeoPandas | It can have a learning curve for beginners |

| Supports advanced geographic calculations | Works best alongside a geospatial database like PostGIS |
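To show what a point-in-polygon test involves, here is a minimal ray-casting sketch in plain Python. It is not Shapely's implementation. With Shapely installed, the equivalent check would be `Polygon(ring).contains(Point(x, y))`, which also handles holes and edge cases that this sketch ignores, such as points lying exactly on the boundary.

```python
def point_in_polygon(x, y, ring):
    """Ray casting: count how many edges a ray going right from (x, y) crosses."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical square service area.
square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
print(point_in_polygon(2.0, 2.0, square))
print(point_in_polygon(5.0, 2.0, square))
```

This kind of test is what sits behind tasks like assigning a customer coordinate to a delivery zone or an administrative area.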

Final Considerations for Using Open Data from Python

Python offers powerful tools for working with location data, but each library has trade-offs. Pyzipcode and Uszipcode are helpful for simple U.S.-focused applications but fall short of global coverage. Geopy provides more flexibility but relies on third-party APIs, while Shapely is ideal for spatial analysis but lacks built-in postal data. Choosing the right tool depends on your project’s scale, scope, and requirements.

| Feature | Details |

|---|---|

| Geographic Coverage | Varies by the library, primarily US-focused, except Geopy |

| Update Frequency | Pyzipcode: limited; Uszipcode: semi-regular; Geopy: API-dependent |

| Data Validation | Pyzipcode: basic validation; Uszipcode, Geopy (API-based): enhanced |

| Postal Code Coverage | Pyzipcode: basic US; Uszipcode: comprehensive US; Geopy: API-dependent |

| Integration Capabilities | Python libraries, some require database downloads |

| Best Use Case | Python-based postal lookups, geocoding, spatial analysis |

| Data Format | Pyzipcode, Uszipcode: Python objects; Geopy: API responses; Shapely: geometric data |

| Technical Expertise Required | Low to moderate, varies by library |

| Additional Features | Geopy: global geocoding; Uszipcode: US demographics; Shapely: spatial analysis |

| Limitations | Pyzipcode: outdated; Uszipcode: US-only; Geopy: relies on external APIs |

HDX (Humanitarian Data Exchange)

The Humanitarian Data Exchange represents a unique entry in the open-source location data landscape, primarily focused on humanitarian and development contexts. Managed by the United Nations Office for the Coordination of Humanitarian Affairs (OCHA), HDX offers valuable location datasets that can serve specific business needs, particularly for organizations operating in developing regions or areas affected by humanitarian situations.

HDX Data Characteristics

- Specialized focus on humanitarian contexts

- Regular updates in crisis-affected regions

- Strong data validation protocols

- Integration with international standards

What sets HDX apart is its structured approach to data governance through UN-established protocols. While HDX maintains stricter quality control than many other open platforms, the data still comes from various humanitarian organizations, which can lead to variations in how datasets are structured and formatted.

Organizations should understand HDX’s specific focus and limitations. While it excels in providing detailed location data for humanitarian contexts, it may not offer the comprehensive commercial coverage needed for standard business operations.

HDX data may exhibit inconsistent structures between sources, spatial misalignment requiring reconciliation, and varying update frequencies prioritizing crisis regions over stable areas. These challenges are particularly relevant for businesses requiring consistent data across multiple territories.

Important Considerations

- Inconsistent Data Structure: Datasets on HDX come from various humanitarian organizations, meaning formats, naming conventions, and attribute structures can vary significantly. This lack of standardization can complicate integration into existing workflows.

- Shape Alignment Issues: Geographic boundaries and spatial data from different sources may not always align correctly, leading to inconsistencies when merging datasets. Users may need to clean and reconcile overlapping or mismatched polygons manually.

- Outdated Data: While HDX prioritizes crisis-affected regions, updates can be infrequent for stable areas. Businesses relying on HDX should verify data freshness, especially for use cases requiring up-to-date administrative boundaries or infrastructure details.

While HDX provides valuable location data, its humanitarian focus means businesses must assess whether the data structure, update frequency, and spatial consistency meet their needs before integrating it into their location data strategy.

| Feature | Details |

|---|---|

| Geographic Coverage | Global (humanitarian focus) |

| Update Frequency | Crisis-driven updates |

| Data Validation | Strict UN protocols |

| Postal Code Coverage | Varies by region |

| Integration Capabilities | API and bulk download |

| Best Use Case | Humanitarian operations |

| Data Format | Multiple standardized formats |

| Technical Expertise Required | Moderate |

| Additional Features | Humanitarian indicators |

| Limitations | Crisis-area focus, inconsistent structure, misaligned shapes |

Natural Earth Data

Natural Earth Data is a widely used open-source geographic dataset providing administrative boundaries, physical geography, and cultural landmarks at a global scale. It is designed for cartographic applications, offering clean and visually appealing data in various resolutions (1:10m, 1:50m, and 1:110m scales). Its primary advantage is the balance between accuracy, completeness, and ease of use, making it a popular choice for mapmakers and analysts looking for a lightweight, general-purpose geographic dataset.

Key Strengths

Consistent and Well-Structured Data

Natural Earth standardizes its datasets, ensuring a uniform structure across administrative boundaries, coastlines, rivers, and populated places. This makes it easier to integrate into GIS and mapping workflows compared to some other open datasets.

Multiple Resolutions for Different Use Cases

The availability of low-, medium-, and high-resolution datasets allows users to select the appropriate level of detail for their needs, from small-scale global overview maps to more detailed regional analysis.

Seamless Integration with GIS Tools

The data is provided in user-friendly formats (Shapefile and GeoJSON), making it compatible with tools like QGIS, ArcGIS, and PostGIS without requiring extensive preprocessing.

No Licensing Restrictions

The data is in the public domain, meaning businesses and individuals can use, modify, and distribute it freely, even for commercial purposes.

Includes Complementary Geographic Features

Unlike datasets that focus purely on administrative boundaries or postal codes, Natural Earth includes physical geography (rivers, lakes, elevation contours) and cultural features (populated places, urban areas), which can be helpful for broader spatial analysis.

Limitations & Challenges

Lack of Granularity in Administrative Boundaries

While Natural Earth provides global administrative boundaries, these are not always the most up-to-date or detailed. The dataset prioritizes consistency over precision, meaning national and regional boundaries might be simplified or outdated compared to authoritative sources like national mapping agencies.

No Postal Code Coverage

Businesses that need postal code boundaries for logistics, address validation, or demographic analysis will find Natural Earth unsuitable for these use cases. It must be supplemented with datasets like OpenStreetMap or national postal datasets.

Potential Misalignment with Other Datasets

While well structured, Natural Earth data may not always align perfectly with other geographic datasets, especially those derived from different projection systems or data sources. Users working with multiple datasets may need to make manual adjustments to ensure spatial consistency.

Data Update Frequency & Timeliness

Natural Earth is updated periodically but not as frequently as datasets maintained by national governments or commercial providers. Administrative boundaries may lag behind real-world changes, especially in politically dynamic regions.

| Feature | Details |

|---|---|

| Geographic Coverage | Global |

| Update Frequency | Periodic but not frequent |

| Data Validation | Standardized but may not reflect the latest changes |

| Postal Code Coverage | No postal codes |

| Integration Capabilities | GIS-compatible formats (Shapefile, GeoJSON) |

| Best Use Case | Cartography and global geographic reference |

| Data Format | Shapefile, GeoJSON |

| Technical Expertise Required | Low |

| Additional Features | Physical geography, administrative boundaries |

| Limitations | Low-resolution boundaries, outdated in some regions |

GADM (Database of Global Administrative Areas)

GADM stands out for its detailed hierarchical representation of administrative boundaries, from national borders down to local administrative units. This level of detail makes it particularly useful for:

- Visualizing jurisdictional boundaries for regulatory compliance

- Analyzing demographic data at various administrative levels

- Creating choropleth maps for regional analysis

- Supporting location-based policy implementation
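The value of a multi-level hierarchy is that figures recorded at the lowest level can be rolled up to any parent level. The sketch below illustrates this with hypothetical units keyed by their path in the hierarchy. The names and numbers are made up, and the structure is a simplification rather than GADM's actual file layout.

```python
from collections import defaultdict

# Hypothetical population figures keyed by (country, region, district).
population = {
    ("Country A", "Region 1", "District X"): 1200,
    ("Country A", "Region 1", "District Y"): 800,
    ("Country A", "Region 2", "District Z"): 500,
}


def roll_up(values, depth):
    """Sum values over the first `depth` levels of each hierarchy path."""
    totals = defaultdict(int)
    for path, value in values.items():
        totals[path[:depth]] += value
    return dict(totals)


by_region = roll_up(population, depth=2)
by_country = roll_up(population, depth=1)
print(by_region)
print(by_country)
```

The regional totals produced this way are the kind of values you would join to boundary polygons when building a choropleth map.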

However, businesses should be aware that GADM comes with specific usage restrictions. While free for academic and non-commercial use, commercial applications typically require additional licensing, which may limit its applicability for some business models.

Important Note: GADM’s main strength lies in its administrative boundary polygons rather than postal codes or address data. Organizations requiring address validation or postal code lookup functionality will need to supplement GADM with other datasets.

| Feature | Details |

|---|---|

| Geographic Coverage | Global |

| Update Frequency | Periodic releases (not real-time) |

| Data Validation | Curated by researchers |

| Postal Code Coverage | None (administrative boundaries only) |

| Integration Capabilities | GIS-compatible formats (Shapefile, GeoJSON, etc.) |

| Best Use Case | Administrative boundary analysis |

| Data Format | Shapefile, GeoPackage, KMZ, R-raster |

| Technical Expertise Required | Moderate |

| Additional Features | Multi-level administrative hierarchies |

| Limitations | Commercial use restrictions, no postal data |

Conclusion

Throughout this article, we’ve explored the landscape of open-source location databases, examining their features, strengths, and limitations. These resources represent remarkable achievements in collaborative data development and offer valuable options for many business applications.

Open-source location data can be valuable in specific scenarios:

- Initial exploratory analysis before committing to paid solutions

- Projects with limited budgets, especially non-profits and educational institutions

- Applications focusing on well-covered urban areas in developed regions

- Cases where community-updated information provides unique insights

- Situations where customization and control over data processing are paramount

It’s important to acknowledge that depending on your use case and skillsets, using open-source data can either be the best, cheapest choice, or a tedious, expensive process. The quality and coverage vary significantly across different regions, and the technical expertise required to process, standardize, and maintain this data should not be underestimated.

A scenario we’ve witnessed multiple times involves organizations starting with open-source data and realizing months into development that the data quality or amount of work needed to polish it does not fit their business needs. This can result in significant delays and rework that could have been avoided with a more thorough initial assessment.

For businesses requiring consistent global coverage, standardized formats, regular updates, and reliable support, professional location databases may ultimately provide better long-term value despite the initial investment. These solutions can help overcome the limitations in coverage, structure, and maintenance that often challenge users of open-source data.

Whether you choose open-source or commercial location data depends on your specific business requirements, technical capabilities, budget constraints, and risk tolerance. By understanding the options presented in this article, you can make a more informed decision about the right approach for your location data strategy.

For organizations requiring reliable, standardized location data with global coverage and dedicated support, GeoPostcodes offers comprehensive solutions that may complement or enhance your existing data ecosystem. Browse the GeoPostcodes database and download a free sample here.

FAQ

How can raw data like climate data or geology maps be used in a Geographic Information System?

A Geographic Information System (GIS) integrates various types of raw data, such as climate data and geology maps, to produce meaningful interactive maps. By loading this data into your mapping software, you can visualize environmental patterns and analyze spatial relationships. GIS tools transform complex datasets into actionable insights, accessible through easy-to-use web services and engaging interactive maps.

What open-source tools support LiDAR data and other GIS formats?

Open-source GIS tools like QGIS handle various datasets, including LiDAR data, standard GIS layers, and other GIS formats. They integrate LiDAR data alongside vector and raster datasets, allowing detailed analysis of geographic, demographic, and environmental information within a unified GIS platform.

Where can I find open-source data?

Find open-source location data through platforms like OpenStreetMap, Natural Earth, GADM, UN Open GIS, government portals (data.gov), Humanitarian Data Exchange, and NASA Earth Data. Universities, research institutions, and specialized repositories like OpenAddresses and GeoNames also offer free spatial datasets with varying coverage and accuracy.

Are there any open-source maps?

Yes, several robust open-source maps and mapping tools exist. OpenStreetMap leads as a community-built global mapping platform. Open-source tools such as MapLibre GL, OpenLayers, Leaflet, and QGIS let you render and customize base maps, while Kepler.gl and D3.js offer visualization frameworks for creating interactive maps using open location data.

How to get geolocation data?

You can obtain geolocation data through geocoding APIs (Nominatim, Photon), government census databases, open repositories (GeoNames, OpenAddresses), mobile device GPS, IP address lookup services, specialized datasets from universities, and direct collection via GPS devices. However, open-source data has limitations that can create financial and legal risks. For commercial applications, verify data quality and compliance with relevant privacy regulations before use.